K-Content News

- November 28, 2018

The Future of Interactive AI Content as Seen Through Naver’s ‘Clova’

The Interface Transition: From Mobile to Voice

One of my favorite TV programs from the 1980s was the American drama series Knight Rider. KITT, the car from the show, was remarkable: it drove itself (autonomous driving), understood what people said, and could even talk. Today’s automotive and IT industries are turning this science fiction into science fact. We now live in an age where we can control our navigation systems by voice while driving, and can even start our cars and open car doors using voice commands.

The Spread of AI Speakers and AI Speaker Content

Smartphones were a novelty just 10 years ago, but today most people own at least one. The growth of the AI speaker market today resembles the early days of the smartphone market. Google started selling its Google Home speakers in Korea in October 2018, and with the upcoming launch of similar devices by SKT, Naver, and Kakao, competition in the domestic market is expected to grow fierce. According to domestic market research, around 15% of all households (3 million households) now own AI speakers. [Note 1] Based on the current trend, AI speakers are expected to become ubiquitous by next year.

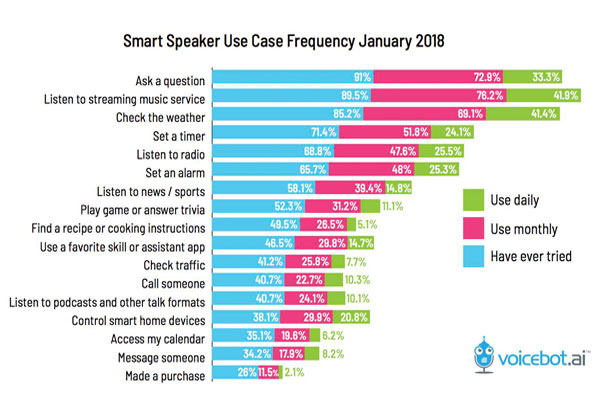

As for AI speaker content, audiobooks are now seeing average annual growth of 20%. This year, the number of audiobook searches exceeded 80,000, double the number from two years ago. [Note 2] Naturally, the desire to consume audio content has spread to the world of speakers. According to a report from voicebot.ai, the most consumed content on AI speakers is (1) music, followed by (2) weather and (3) simple questions. The percentage of users who made purchases through AI speakers was relatively low, at 2.1%. [Note 3] These figures were largely similar in the domestic AI speaker market.

However, as trends shift, demand is growing for fun and emotional interaction in addition to basic music playback. According to Business Insider, more than 1 million users had emotional conversations with Alexa in 2017, making comments such as ‘marry me’. A speaker usage pattern analysis conducted by company ‘S’ showed that emotional conversations such as ‘I like you’ and ‘I'm bored’ increased, accounting for 4.1% of speaker usage, while music playback declined from 60% to 42%. Other reports have shown that emotional conversations increased by 8% on rainy days, and that negative remarks such as ‘ugh’ increased by 22% on especially hot days. [Note 4]

Future Forecast for AI Content

As mentioned above, the future of AI content is quite bright. First, instead of content that is simply played or that merely responds to user input, we will increasingly see interactive content in which AI devices hold conversations with users, and in which users converse with devices while looking at a screen. With the launch of AI speakers equipped with other devices, we will see even more diverse content designed for interactive, screen-based use. In the future, AI speakers may be used to bring up recipes, discuss products while ordering them, and show illustrations for fairy tales.

We are already seeing the adoption of speaker authentication technologies that learn and recognize the voices of different speakers. Once AI speakers can distinguish between individual voices, they will be able to provide commercial services tailored to each person. When asked for a schedule, an AI speaker will show different results depending on which family member is speaking, and children will no longer be able to accidentally order and pay for goods using voice commands.

Further, we will see a diversification of AI speaker voices. Currently, devices support only one or two male and female voices, but as voice synthesis technologies advance and come into wider use, speakers will be able to imitate the voices of celebrities or favorite characters. These character voices are expected to be accompanied by “fun” skills.

Lastly, we can expect device platforms to diversify further. Devices able to hear and recognize voice commands will no longer be limited to speakers, but will also include earphones, household appliances, automobiles, and more. In the near future, we will be able to tell our vacuum cleaners to clean, and ask our refrigerators to give us recipes.

[Note 1] Correspondent KO, Hyeon-sil (Rapid Growth in Korean AI Speaker Market, Korea Expected to Reach “World Top 5”), Yonhap News, July 12, 2018. News Article.

[Note 2] Correspondent JO, Yoon-ju (Audiobooks are Back with Broader Contents), Financial News, October 21, 2018. News Article.

[Note 3] voicebot.ai (Smart Speaker Use Frequency Report), January 2018.

[Note 4] Correspondent MAENG, Ha-gyeong (“Marry me”...AI Speakers as Emotional Partners), Hankook Ilbo. News Article.

* For a more detailed explanation of interaction models, please visit the following links for the Clova Development Guide and other materials.

Clova Dev Days Presentation : https://www.slideshare.net/ClovaPlatform/

Clova Dev Days Video : https://www.youtube.com/channel/UCUuTYvmFcwOg06KnH5xx2sQ

Developer’s Guide : https://developers.naver.com/console/clova/guide/CEK/CEK_Overview.md

Distribution Guide : https://developers.naver.com/console/clovaguide/DevConsole/Guides/CEK/Deploy_Extension.md

OK, Sang-hoon | Naver Evangelist | okgosu.exp@navercorp.com